In our experience at various AAA game studios, HUD creation has revolved around more or less the same toolchain: Flash as the editor, plus a runtime library that takes the resulting SWF files and renders them in-game (either developed in-house or from a third party, such as Scaleform, may it rest in peace).

Much of this setup's popularity came from its easy workflow for creating, animating, and integrating HUD elements into the game. Things have changed since then, though. Nowadays 3D elements are the trend, and they are not something one can easily author in Flash.

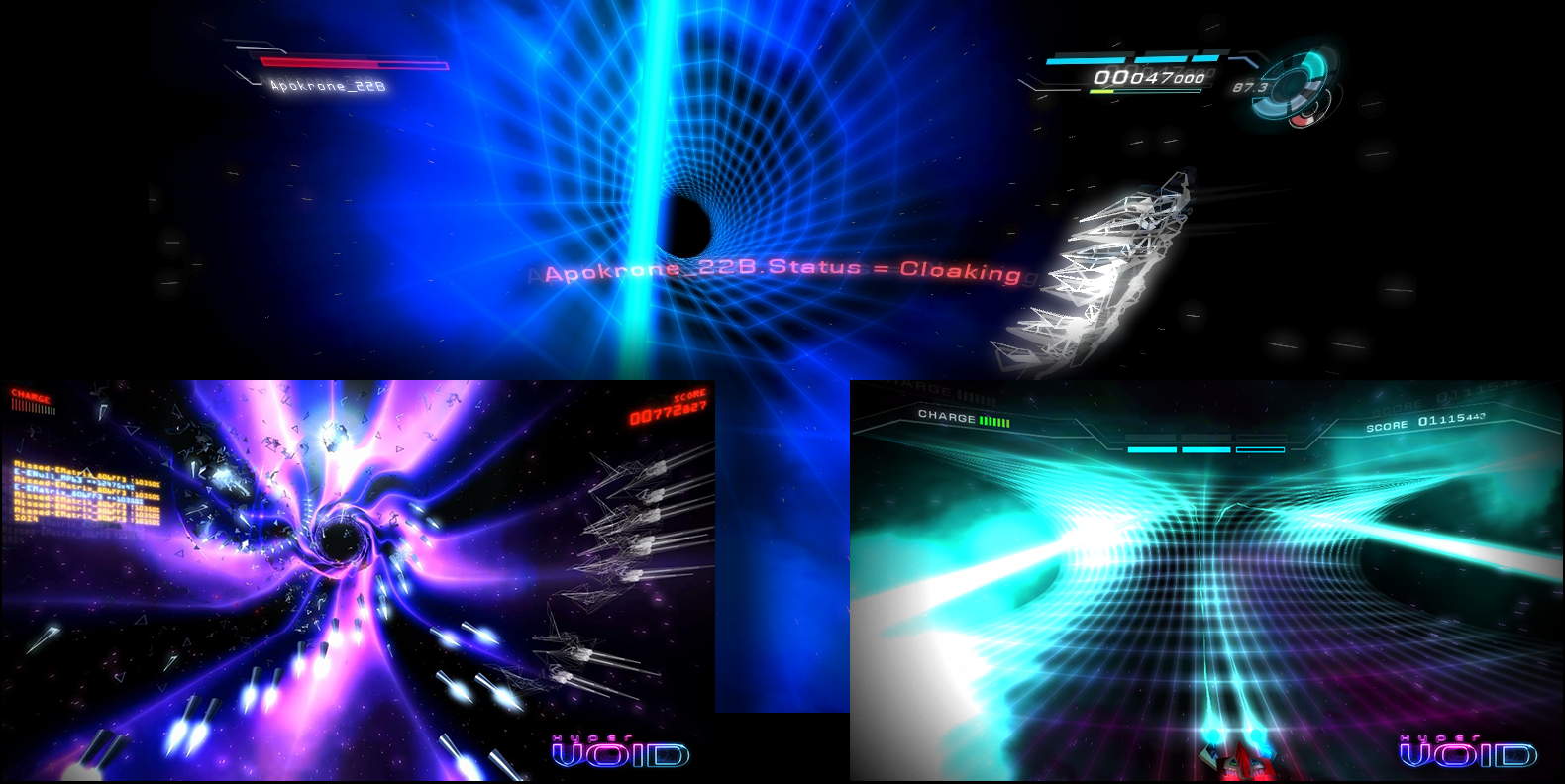

When we started designing Hyper Void's HUD, we wanted to give the player a feeling of depth and holography, which meant using true 3D elements in addition to 2D ones. However, we did not want to push the holographic 3D style as far as, say, Iron Man does. Unlike Iron Man's detailed and complex HUD (which even Stark seems to have a hard time following), Hyper Void needed a simplified HUD that stays clear and easy for the player to read and track amid the game's dense, fast-paced action. One key to meeting this challenge was fast iteration: drafting a design and testing it in the final game view had to be quick.

Simply extending the common HUD pipeline to 3D would have required three different packages: first, 2D vector/pixel software to author the UI art; second, 3D software to create the 3D elements that carry the 2D art; and finally, the game editor, which combines all the assets and lets you see the results in the final game. That did not sound like fast iteration to us. And, techies that we are, we wanted to experiment with a workflow different from the ones we had grown accustomed to at other companies.

So, is it possible to combine all previous steps into one seamless process from design to visualization? The answer is yes! And this is how we did it:

By combining Softimage's integrated 2D vector/pixel painting system, Softimage Illusion, with its standard 3D animation features, we could paint and model at the same time. With this setup, we design an element and see it projected onto the 3D elements as it is being painted. Moreover, by replicating the game's camera setup and placing gameplay videos in the viewport background, we were able to design the holographic HUD and experience it immediately, as if it were running in the final game.
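For readers curious about the geometry behind "seeing the art projected from the game camera", one common general technique is camera projection mapping: each vertex of a 3D element gets the texture coordinate of the spot where the camera sees it, so the 2D art lines up with the player's view. The sketch below is only an illustration of that idea under simplifying assumptions (a pinhole camera looking down -Z with no rotation); it is not Softimage's internal implementation, and the function names are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]
UV = Tuple[float, float]


def project_to_uv(point: Vec3, cam_pos: Vec3, fov_y_deg: float, aspect: float) -> UV:
    """Project a world-space point through a pinhole camera into [0, 1] UV space.

    Assumption: the camera sits at cam_pos, looks down -Z with +Y up, and is not rotated.
    """
    x = point[0] - cam_pos[0]
    y = point[1] - cam_pos[1]
    z = point[2] - cam_pos[2]
    if z >= 0.0:
        raise ValueError("point is not in front of the camera")
    # Focal length derived from the vertical field of view.
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    # Perspective divide by the distance along the view axis (-z).
    ndc_x = (f / aspect) * x / -z
    ndc_y = f * y / -z
    # Remap normalized device coordinates from [-1, 1] to [0, 1].
    return (0.5 * (ndc_x + 1.0), 0.5 * (ndc_y + 1.0))


def camera_projection_uvs(vertices: Iterable[Vec3], cam_pos: Vec3,
                          fov_y_deg: float, aspect: float) -> List[UV]:
    """Give each vertex the UV of where the camera sees it (camera projection mapping)."""
    return [project_to_uv(v, cam_pos, fov_y_deg, aspect) for v in vertices]
```

For example, with a 90° vertical field of view and a square aspect ratio, a point straight ahead of the camera maps to the center of the texture, (0.5, 0.5), while the corners of a quad that exactly fills the view map to roughly (0, 0) and (1, 1). Using the same camera parameters as the game is what keeps the painted art aligned with what the player will see.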

To test the behavior of specific HUD elements, we used Softimage's node-based system to prototype the game logic that drives them, mirroring how they are controlled in our game editor. Luckily, our game editor's node-based system has very similar nodes.

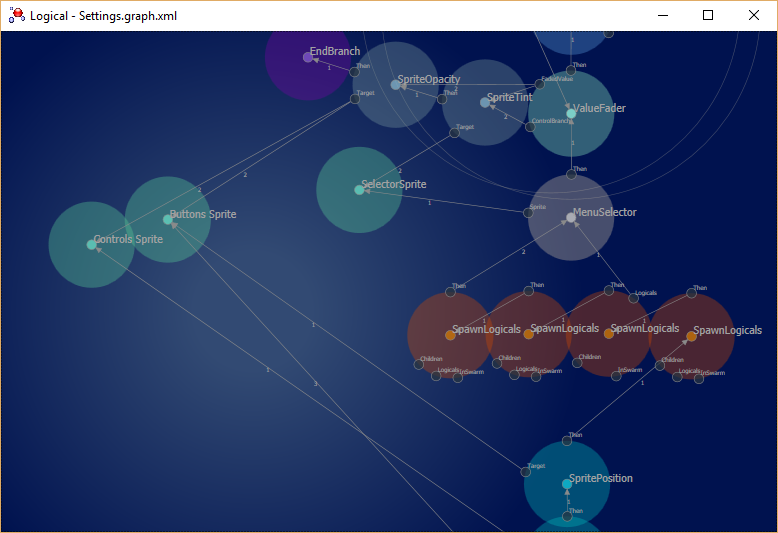

The screenshot below is taken from our in-house engine's UI logic editor. If you are familiar with Softimage Illusion's nodes, you can probably already spot some equivalents here, such as the opacity, tint, and positioning nodes.
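To give a rough idea of the kind of logic these opacity, tint, and positioning nodes express, here is a minimal sketch of a chain of nodes driven by a single normalized game value, for example a hypothetical shield level between 0 and 1. This is not our engine's code, and none of the class names come from Softimage or our editor. It is an illustrative assumption that simplifies a node graph to a linear chain.

```python
from dataclasses import dataclass
from typing import List, Tuple

Color = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def clamp01(t: float) -> float:
    """Clamp a value to the [0, 1] range."""
    return max(0.0, min(1.0, t))


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b."""
    return a + (b - a) * t


@dataclass
class HudElement:
    opacity: float = 1.0
    tint: Color = (1.0, 1.0, 1.0)
    position: Vec2 = (0.0, 0.0)


class OpacityNode:
    """Hypothetical node: fades the element between two opacity values."""
    def __init__(self, low: float = 0.0, high: float = 1.0):
        self.low, self.high = low, high

    def apply(self, element: HudElement, t: float) -> None:
        element.opacity = lerp(self.low, self.high, t)


class TintNode:
    """Hypothetical node: blends the element's tint between two RGB colors."""
    def __init__(self, low: Color, high: Color):
        self.low, self.high = low, high

    def apply(self, element: HudElement, t: float) -> None:
        element.tint = tuple(lerp(a, b, t) for a, b in zip(self.low, self.high))


class PositionNode:
    """Hypothetical node: slides the element between two screen positions."""
    def __init__(self, start: Vec2, end: Vec2):
        self.start, self.end = start, end

    def apply(self, element: HudElement, t: float) -> None:
        element.position = (lerp(self.start[0], self.end[0], t),
                            lerp(self.start[1], self.end[1], t))


def evaluate(nodes: List, element: HudElement, game_value: float) -> HudElement:
    """Run every node in order on the element, driven by a clamped game value."""
    t = clamp01(game_value)
    for node in nodes:
        node.apply(element, t)
    return element
```

With `[OpacityNode(), TintNode((1.0, 0.0, 0.0), (0.0, 1.0, 0.0)), PositionNode((0.0, 0.0), (10.0, 0.0))]` and a game value of 0.5, the element ends up half transparent, tinted halfway between red and green, and halfway along its path. Keeping each node responsible for one property is what makes the logic easy to carry between two node-based tools.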

What is great about this approach is that, without writing a single line of code, we got a modern HUD design workflow with immediate feedback. It also has the following qualities:

- Procedural and non-destructive. Everything in Softimage is node-based, and every stroke is recorded in its node's construction history.

- Resolution-independent, while still allowing vector and pixel techniques to be blended.

- Full 3D modeling toolset.

- Content is exportable through the existing 3D pipeline, so there is no need to build SWF files.

- Doesn't rely on Flash/Scaleform (at least for us, we consider this a quality).

This video shows a little doodling with the mirroring operator activated:

The following image shows some of the HUD iterations in-game. Thanks to this workflow, trying out different styles was fast and fun ☺

Of course, all this nice stuff came with a few hiccups and limitations. First, we had to deal with the lack of a vector text renderer in Softimage Illusion. Such a node could be implemented using the SDK, but we did not do that; instead, we developed another workflow to generate text without any coding (that might be another blog post). Another issue was Softimage Illusion's stability. Unfortunately, Autodesk did not invest much in fixing Softimage Illusion's bugs, even in its latest release (2015 SP2) 😕.

In the end, it was a great and rewarding experience. Being able to experiment with techniques outside the mainstream feels great and is a big motivation in our daily work. We highly recommend trying it out sometime... ☺